2016-03-31 08:08:45 -04:00

|

|

|

#

|

2023-11-21 15:29:58 -05:00

|

|

|

# This file is licensed under the Affero General Public License (AGPL) version 3.

|

|

|

|

|

#

|

2024-01-23 06:26:48 -05:00

|

|

|

# Copyright 2020 Sorunome

|

|

|

|

|

# Copyright 2016-2020 The Matrix.org Foundation C.I.C.

|

2023-11-21 15:29:58 -05:00

|

|

|

# Copyright (C) 2023 New Vector, Ltd

|

|

|

|

|

#

|

|

|

|

|

# This program is free software: you can redistribute it and/or modify

|

|

|

|

|

# it under the terms of the GNU Affero General Public License as

|

|

|

|

|

# published by the Free Software Foundation, either version 3 of the

|

|

|

|

|

# License, or (at your option) any later version.

|

|

|

|

|

#

|

|

|

|

|

# See the GNU Affero General Public License for more details:

|

|

|

|

|

# <https://www.gnu.org/licenses/agpl-3.0.html>.

|

|

|

|

|

#

|

|

|

|

|

# Originally licensed under the Apache License, Version 2.0:

|

|

|

|

|

# <http://www.apache.org/licenses/LICENSE-2.0>.

|

|

|

|

|

#

|

|

|

|

|

# [This file includes modifications made by New Vector Limited]

|

2016-03-31 08:08:45 -04:00

|

|

|

#

|

|

|

|

|

#

|

2018-03-13 12:57:07 -04:00

|

|

|

import abc

|

2016-07-26 11:46:53 -04:00

|

|

|

import logging

|

2020-08-20 15:07:42 -04:00

|

|

|

import random

|

2020-06-16 08:51:47 -04:00

|

|

|

from http import HTTPStatus

|

2023-10-16 07:35:22 -04:00

|

|

|

from typing import TYPE_CHECKING, Iterable, List, Optional, Set, Tuple

|

2016-03-31 08:08:45 -04:00

|

|

|

|

2019-07-29 12:47:27 -04:00

|

|

|

from synapse import types

|

2021-08-04 13:39:57 -04:00

|

|

|

from synapse.api.constants import (

|

|

|

|

|

AccountDataTypes,

|

|

|

|

|

EventContentFields,

|

|

|

|

|

EventTypes,

|

2021-09-06 07:17:16 -04:00

|

|

|

GuestAccess,

|

2021-08-04 13:39:57 -04:00

|

|

|

Membership,

|

|

|

|

|

)

|

2023-02-10 18:31:05 -05:00

|

|

|

from synapse.api.errors import (

|

|

|

|

|

AuthError,

|

|

|

|

|

Codes,

|

|

|

|

|

PartialStateConflictError,

|

|

|

|

|

ShadowBanError,

|

|

|

|

|

SynapseError,

|

|

|

|

|

)

|

2020-07-31 09:34:42 -04:00

|

|

|

from synapse.api.ratelimiting import Ratelimiter

|

2021-07-26 12:17:00 -04:00

|

|

|

from synapse.event_auth import get_named_level, get_power_level_event

|

2020-05-15 15:05:25 -04:00

|

|

|

from synapse.events import EventBase

|

|

|

|

|

from synapse.events.snapshot import EventContext

|

2023-09-15 09:37:44 -04:00

|

|

|

from synapse.handlers.pagination import PURGE_ROOM_ACTION_NAME

|

2021-08-23 11:25:33 -04:00

|

|

|

from synapse.handlers.profile import MAX_AVATAR_URL_LEN, MAX_DISPLAYNAME_LEN

|

2023-05-03 07:27:33 -04:00

|

|

|

from synapse.handlers.state_deltas import MatchChange, StateDeltasHandler

|

2023-08-16 10:19:54 -04:00

|

|

|

from synapse.handlers.worker_lock import NEW_EVENT_DURING_PURGE_LOCK_NAME

|

2022-08-03 13:19:34 -04:00

|

|

|

from synapse.logging import opentracing

|

2023-05-03 07:27:33 -04:00

|

|

|

from synapse.metrics import event_processing_positions

|

|

|

|

|

from synapse.metrics.background_process_metrics import run_as_background_process

|

2023-10-16 07:35:22 -04:00

|

|

|

from synapse.storage.databases.main.state_deltas import StateDelta

|

2021-06-09 14:39:51 -04:00

|

|

|

from synapse.types import (

|

|

|

|

|

JsonDict,

|

|

|

|

|

Requester,

|

|

|

|

|

RoomAlias,

|

|

|

|

|

RoomID,

|

|

|

|

|

StateMap,

|

|

|

|

|

UserID,

|

2021-09-06 07:17:16 -04:00

|

|

|

create_requester,

|

2021-06-09 14:39:51 -04:00

|

|

|

get_domain_from_id,

|

|

|

|

|

)

|

2022-12-12 11:19:30 -05:00

|

|

|

from synapse.types.state import StateFilter

|

2018-08-10 09:50:21 -04:00

|

|

|

from synapse.util.async_helpers import Linearizer

|

2020-09-09 12:22:00 -04:00

|

|

|

from synapse.util.distributor import user_left_room

|

2016-03-31 08:08:45 -04:00

|

|

|

|

2020-08-12 10:05:50 -04:00

|

|

|

if TYPE_CHECKING:

|

|

|

|

|

from synapse.server import HomeServer

|

|

|

|

|

|

|

|

|

|

|

2016-03-31 08:08:45 -04:00

|

|

|

logger = logging.getLogger(__name__)

|

|

|

|

|

|

|

|

|

|

|

2023-02-10 18:31:05 -05:00

|

|

|

class NoKnownServersError(SynapseError):

|

|

|

|

|

"""No server already resident to the room was provided to the join/knock operation."""

|

|

|

|

|

|

|

|

|

|

def __init__(self, msg: str = "No known servers"):

|

|

|

|

|

super().__init__(404, msg)

|

|

|

|

|

|

|

|

|

|

|

2020-09-16 15:15:55 -04:00

|

|

|

class RoomMemberHandler(metaclass=abc.ABCMeta):

|

2016-03-31 08:08:45 -04:00

|

|

|

# TODO(paul): This handler currently contains a messy conflation of

|

|

|

|

|

# low-level API that works on UserID objects and so on, and REST-level

|

|

|

|

|

# API that takes ID strings and returns pagination chunks. These concerns

|

|

|

|

|

# ought to be separated out a lot better.

|

|

|

|

|

|

2020-08-12 10:05:50 -04:00

|

|

|

def __init__(self, hs: "HomeServer"):

|

2018-03-01 11:49:12 -05:00

|

|

|

self.hs = hs

|

2022-02-23 06:04:02 -05:00

|

|

|

self.store = hs.get_datastores().main

|

2022-06-01 11:02:53 -04:00

|

|

|

self._storage_controllers = hs.get_storage_controllers()

|

2018-03-01 05:54:37 -05:00

|

|

|

self.auth = hs.get_auth()

|

|

|

|

|

self.state_handler = hs.get_state_handler()

|

|

|

|

|

self.config = hs.config

|

2021-09-06 07:17:16 -04:00

|

|

|

self._server_name = hs.hostname

|

2018-03-01 05:54:37 -05:00

|

|

|

|

2020-10-09 07:24:34 -04:00

|

|

|

self.federation_handler = hs.get_federation_handler()

|

|

|

|

|

self.directory_handler = hs.get_directory_handler()

|

|

|

|

|

self.identity_handler = hs.get_identity_handler()

|

2019-02-20 02:47:31 -05:00

|

|

|

self.registration_handler = hs.get_registration_handler()

|

2017-08-25 09:34:56 -04:00

|

|

|

self.profile_handler = hs.get_profile_handler()

|

2019-01-18 10:27:11 -05:00

|

|

|

self.event_creation_handler = hs.get_event_creation_handler()

|

2021-01-18 10:47:59 -05:00

|

|

|

self.account_data_handler = hs.get_account_data_handler()

|

2021-04-23 07:05:51 -04:00

|

|

|

self.event_auth_handler = hs.get_event_auth_handler()

|

2023-07-31 05:58:03 -04:00

|

|

|

self._worker_lock_handler = hs.get_worker_locks_handler()

|

2016-03-31 08:08:45 -04:00

|

|

|

|

2021-08-23 11:25:33 -04:00

|

|

|

self.member_linearizer: Linearizer = Linearizer(name="member")

|

2022-02-16 06:16:48 -05:00

|

|

|

self.member_as_limiter = Linearizer(max_count=10, name="member_as_limiter")

|

2016-04-06 10:44:22 -04:00

|

|

|

|

2016-03-31 08:08:45 -04:00

|

|

|

self.clock = hs.get_clock()

|

2023-04-17 20:57:40 -04:00

|

|

|

self._spam_checker_module_callbacks = hs.get_module_api_callbacks().spam_checker

|

2023-05-04 10:18:22 -04:00

|

|

|

self._third_party_event_rules = (

|

|

|

|

|

hs.get_module_api_callbacks().third_party_event_rules

|

|

|

|

|

)

|

2021-09-24 07:25:21 -04:00

|

|

|

self._server_notices_mxid = self.config.servernotices.server_notices_mxid

|

2021-10-04 07:18:54 -04:00

|

|

|

self._enable_lookup = hs.config.registration.enable_3pid_lookup

|

2021-09-29 06:44:15 -04:00

|

|

|

self.allow_per_room_profiles = self.config.server.allow_per_room_profiles

|

2016-03-31 08:08:45 -04:00

|

|

|

|

2020-07-31 09:34:42 -04:00

|

|

|

self._join_rate_limiter_local = Ratelimiter(

|

2021-03-30 07:06:09 -04:00

|

|

|

store=self.store,

|

2020-07-31 09:34:42 -04:00

|

|

|

clock=self.clock,

|

2023-08-29 19:39:39 -04:00

|

|

|

cfg=hs.config.ratelimiting.rc_joins_local,

|

2020-07-31 09:34:42 -04:00

|

|

|

)

|

2022-07-19 07:45:17 -04:00

|

|

|

# Tracks joins from local users to rooms this server isn't a member of.

|

|

|

|

|

# I.e. joins this server makes by requesting /make_join /send_join from

|

|

|

|

|

# another server.

|

2020-07-31 09:34:42 -04:00

|

|

|

self._join_rate_limiter_remote = Ratelimiter(

|

2021-03-30 07:06:09 -04:00

|

|

|

store=self.store,

|

2020-07-31 09:34:42 -04:00

|

|

|

clock=self.clock,

|

2023-08-29 19:39:39 -04:00

|

|

|

cfg=hs.config.ratelimiting.rc_joins_remote,

|

2020-07-31 09:34:42 -04:00

|

|

|

)

|

2022-07-19 07:45:17 -04:00

|

|

|

# TODO: find a better place to keep this Ratelimiter.

|

|

|

|

|

# It needs to be

|

|

|

|

|

# - written to by event persistence code

|

|

|

|

|

# - written to by something which can snoop on replication streams

|

|

|

|

|

# - read by the RoomMemberHandler to rate limit joins from local users

|

|

|

|

|

# - read by the FederationServer to rate limit make_joins and send_joins from

|

|

|

|

|

# other homeservers

|

|

|

|

|

# I wonder if a homeserver-wide collection of rate limiters might be cleaner?

|

|

|

|

|

self._join_rate_per_room_limiter = Ratelimiter(

|

|

|

|

|

store=self.store,

|

|

|

|

|

clock=self.clock,

|

2023-08-29 19:39:39 -04:00

|

|

|

cfg=hs.config.ratelimiting.rc_joins_per_room,

|

2022-07-19 07:45:17 -04:00

|

|

|

)

|

2020-07-31 09:34:42 -04:00

|

|

|

|

2022-06-30 05:44:47 -04:00

|

|

|

# Ratelimiter for invites, keyed by room (across all issuers, all

|

|

|

|

|

# recipients).

|

2021-01-29 11:38:29 -05:00

|

|

|

self._invites_per_room_limiter = Ratelimiter(

|

2021-03-30 07:06:09 -04:00

|

|

|

store=self.store,

|

2021-01-29 11:38:29 -05:00

|

|

|

clock=self.clock,

|

2023-08-29 19:39:39 -04:00

|

|

|

cfg=hs.config.ratelimiting.rc_invites_per_room,

|

2021-01-29 11:38:29 -05:00

|

|

|

)

|

2022-06-30 05:44:47 -04:00

|

|

|

|

|

|

|

|

# Ratelimiter for invites, keyed by recipient (across all rooms, all

|

|

|

|

|

# issuers).

|

|

|

|

|

self._invites_per_recipient_limiter = Ratelimiter(

|

2021-03-30 07:06:09 -04:00

|

|

|

store=self.store,

|

2021-01-29 11:38:29 -05:00

|

|

|

clock=self.clock,

|

2023-08-29 19:39:39 -04:00

|

|

|

cfg=hs.config.ratelimiting.rc_invites_per_user,

|

2021-01-29 11:38:29 -05:00

|

|

|

)

|

|

|

|

|

|

2022-06-30 05:44:47 -04:00

|

|

|

# Ratelimiter for invites, keyed by issuer (across all rooms, all

|

|

|

|

|

# recipients).

|

|

|

|

|

self._invites_per_issuer_limiter = Ratelimiter(

|

|

|

|

|

store=self.store,

|

|

|

|

|

clock=self.clock,

|

2023-08-29 19:39:39 -04:00

|

|

|

cfg=hs.config.ratelimiting.rc_invites_per_issuer,

|

2022-06-30 05:44:47 -04:00

|

|

|

)

|

|

|

|

|

|

2022-02-03 08:28:15 -05:00

|

|

|

self._third_party_invite_limiter = Ratelimiter(

|

|

|

|

|

store=self.store,

|

|

|

|

|

clock=self.clock,

|

2023-08-29 19:39:39 -04:00

|

|

|

cfg=hs.config.ratelimiting.rc_third_party_invite,

|

2022-02-03 08:28:15 -05:00

|

|

|

)

|

|

|

|

|

|

2021-10-08 07:44:43 -04:00

|

|

|

self.request_ratelimiter = hs.get_request_ratelimiter()

|

2022-07-19 07:45:17 -04:00

|

|

|

hs.get_notifier().add_new_join_in_room_callback(self._on_user_joined_room)

|

|

|

|

|

|

2023-09-15 09:37:44 -04:00

|

|

|

self._forgotten_room_retention_period = (

|

|

|

|

|

hs.config.server.forgotten_room_retention_period

|

|

|

|

|

)

|

|

|

|

|

|

2022-07-19 07:45:17 -04:00

|

|

|

def _on_user_joined_room(self, event_id: str, room_id: str) -> None:

|

|

|

|

|

"""Notify the rate limiter that a room join has occurred.

|

|

|

|

|

|

|

|

|

|

Use this to inform the RoomMemberHandler about joins that have either

|

|

|

|

|

- taken place on another homeserver, or

|

|

|

|

|

- on another worker in this homeserver.

|

|

|

|

|

Joins actioned by this worker should use the usual `ratelimit` method, which

|

|

|

|

|

checks the limit and increments the counter in one go.

|

|

|

|

|

"""

|

|

|

|

|

self._join_rate_per_room_limiter.record_action(requester=None, key=room_id)

|

2019-04-26 13:06:25 -04:00

|

|

|

|

2018-03-13 09:49:13 -04:00

|

|

|

@abc.abstractmethod

|

2020-05-15 15:05:25 -04:00

|

|

|

async def _remote_join(

|

|

|

|

|

self,

|

|

|

|

|

requester: Requester,

|

|

|

|

|

remote_room_hosts: List[str],

|

|

|

|

|

room_id: str,

|

|

|

|

|

user: UserID,

|

|

|

|

|

content: dict,

|

2020-05-22 09:21:54 -04:00

|

|

|

) -> Tuple[str, int]:

|

2018-03-13 09:49:13 -04:00

|

|

|

"""Try and join a room that this server is not in

|

|

|

|

|

|

|

|

|

|

Args:

|

2022-08-22 09:17:59 -04:00

|

|

|

requester: The user making the request, according to the access token.

|

2020-05-15 15:05:25 -04:00

|

|

|

remote_room_hosts: List of servers that can be used to join via.

|

|

|

|

|

room_id: Room that we are trying to join

|

|

|

|

|

user: User who is trying to join

|

|

|

|

|

content: A dict that should be used as the content of the join event.

|

2023-02-10 18:31:05 -05:00

|

|

|

|

|

|

|

|

Raises:

|

|

|

|

|

NoKnownServersError: if remote_room_hosts does not contain a server joined to

|

|

|

|

|

the room.

|

2018-03-13 09:49:13 -04:00

|

|

|

"""

|

|

|

|

|

raise NotImplementedError()

|

|

|

|

|

|

2021-06-09 14:39:51 -04:00

|

|

|

@abc.abstractmethod

|

|

|

|

|

async def remote_knock(

|

|

|

|

|

self,

|

2023-03-02 12:59:53 -05:00

|

|

|

requester: Requester,

|

2021-06-09 14:39:51 -04:00

|

|

|

remote_room_hosts: List[str],

|

|

|

|

|

room_id: str,

|

|

|

|

|

user: UserID,

|

|

|

|

|

content: dict,

|

|

|

|

|

) -> Tuple[str, int]:

|

|

|

|

|

"""Try and knock on a room that this server is not in

|

|

|

|

|

|

|

|

|

|

Args:

|

|

|

|

|

remote_room_hosts: List of servers that can be used to knock via.

|

|

|

|

|

room_id: Room that we are trying to knock on.

|

|

|

|

|

user: User who is trying to knock.

|

|

|

|

|

content: A dict that should be used as the content of the knock event.

|

|

|

|

|

"""

|

|

|

|

|

raise NotImplementedError()

|

|

|

|

|

|

2018-03-13 09:49:13 -04:00

|

|

|

@abc.abstractmethod

|

2020-07-09 05:40:19 -04:00

|

|

|

async def remote_reject_invite(

|

2020-05-15 15:05:25 -04:00

|

|

|

self,

|

2020-07-09 05:40:19 -04:00

|

|

|

invite_event_id: str,

|

|

|

|

|

txn_id: Optional[str],

|

2020-05-15 15:05:25 -04:00

|

|

|

requester: Requester,

|

2020-07-09 05:40:19 -04:00

|

|

|

content: JsonDict,

|

2020-07-09 08:01:42 -04:00

|

|

|

) -> Tuple[str, int]:

|

2020-07-09 05:40:19 -04:00

|

|

|

"""

|

|

|

|

|

Rejects an out-of-band invite we have received from a remote server

|

2018-03-13 09:49:13 -04:00

|

|

|

|

|

|

|

|

Args:

|

2020-07-09 05:40:19 -04:00

|

|

|

invite_event_id: ID of the invite to be rejected

|

|

|

|

|

txn_id: optional transaction ID supplied by the client

|

|

|

|

|

requester: user making the rejection request, according to the access token

|

|

|

|

|

content: additional content to include in the rejection event.

|

|

|

|

|

Normally an empty dict.

|

2018-03-13 09:49:13 -04:00

|

|

|

|

|

|

|

|

Returns:

|

2020-07-09 05:40:19 -04:00

|

|

|

event id, stream_id of the leave event

|

2018-03-13 09:49:13 -04:00

|

|

|

"""

|

|

|

|

|

raise NotImplementedError()

|

|

|

|

|

|

2021-06-09 14:39:51 -04:00

|

|

|

@abc.abstractmethod

|

|

|

|

|

async def remote_rescind_knock(

|

|

|

|

|

self,

|

|

|

|

|

knock_event_id: str,

|

|

|

|

|

txn_id: Optional[str],

|

|

|

|

|

requester: Requester,

|

|

|

|

|

content: JsonDict,

|

|

|

|

|

) -> Tuple[str, int]:

|

|

|

|

|

"""Rescind a local knock made on a remote room.

|

|

|

|

|

|

|

|

|

|

Args:

|

|

|

|

|

knock_event_id: The ID of the knock event to rescind.

|

|

|

|

|

txn_id: An optional transaction ID supplied by the client.

|

|

|

|

|

requester: The user making the request, according to the access token.

|

|

|

|

|

content: The content of the generated leave event.

|

|

|

|

|

|

|

|

|

|

Returns:

|

|

|

|

|

A tuple containing (event_id, stream_id of the leave event).

|

|

|

|

|

"""

|

|

|

|

|

raise NotImplementedError()

|

|

|

|

|

|

2018-03-13 12:00:26 -04:00

|

|

|

@abc.abstractmethod

|

2020-05-15 15:05:25 -04:00

|

|

|

async def _user_left_room(self, target: UserID, room_id: str) -> None:

|

2018-03-13 12:00:26 -04:00

|

|

|

"""Notifies distributor on master process that the user has left the

|

|

|

|

|

room.

|

|

|

|

|

|

|

|

|

|

Args:

|

2020-05-15 15:05:25 -04:00

|

|

|

target

|

|

|

|

|

room_id

|

2018-03-13 12:00:26 -04:00

|

|

|

"""

|

|

|

|

|

raise NotImplementedError()

|

2016-03-31 08:08:45 -04:00

|

|

|

|

2023-09-15 09:37:44 -04:00

|

|

|

async def forget(

|

|

|

|

|

self, user: UserID, room_id: str, do_not_schedule_purge: bool = False

|

|

|

|

|

) -> None:

|

2023-05-03 07:27:33 -04:00

|

|

|

user_id = user.to_string()

|

|

|

|

|

|

|

|

|

|

member = await self._storage_controllers.state.get_current_state_event(

|

|

|

|

|

room_id=room_id, event_type=EventTypes.Member, state_key=user_id

|

|

|

|

|

)

|

|

|

|

|

membership = member.membership if member else None

|

|

|

|

|

|

|

|

|

|

if membership is not None and membership not in [

|

|

|

|

|

Membership.LEAVE,

|

|

|

|

|

Membership.BAN,

|

|

|

|

|

]:

|

|

|

|

|

raise SynapseError(400, "User %s in room %s" % (user_id, room_id))

|

|

|

|

|

|

|

|

|

|

# In the normal case this call is only required if `membership` is not `None`.

|

|

|

|

|

# However, after the last member has left the room, the background update

|

|

|

|

|

# `_background_remove_left_rooms` deletes rows related to this room from

|

|

|

|

|

# the table `current_state_events`, so `get_current_state_event` returns `None`.

|

|

|

|

|

await self.store.forget(user_id, room_id)

|

2021-03-17 07:14:39 -04:00

|

|

|

|

2023-09-15 09:37:44 -04:00

|

|

|

# If everyone locally has left the room, then there is no reason for us to keep the

|

|

|

|

|

# room around, so we automatically purge the room after a little bit

|

|

|

|

|

if (

|

|

|

|

|

not do_not_schedule_purge

|

|

|

|

|

and self._forgotten_room_retention_period

|

|

|

|

|

and await self.store.is_locally_forgotten_room(room_id)

|

|

|

|

|

):

|

|

|

|

|

await self.hs.get_task_scheduler().schedule_task(

|

|

|

|

|

PURGE_ROOM_ACTION_NAME,

|

|

|

|

|

resource_id=room_id,

|

|

|

|

|

timestamp=self.clock.time_msec()

|

|

|

|

|

+ self._forgotten_room_retention_period,

|

|

|

|

|

)

|

|

|

|

|

|

2021-05-12 10:05:28 -04:00

|

|

|

async def ratelimit_multiple_invites(

|

|

|

|

|

self,

|

|

|

|

|

requester: Optional[Requester],

|

|

|

|

|

room_id: Optional[str],

|

|

|

|

|

n_invites: int,

|

|

|

|

|

update: bool = True,

|

2021-09-20 08:56:23 -04:00

|

|

|

) -> None:

|

2021-05-12 10:05:28 -04:00

|

|

|

"""Ratelimit more than one invite sent by the given requester in the given room.

|

|

|

|

|

|

|

|

|

|

Args:

|

|

|

|

|

requester: The requester sending the invites.

|

|

|

|

|

room_id: The room the invites are being sent in.

|

|

|

|

|

n_invites: The number of invites to ratelimit for.

|

|

|

|

|

update: Whether to update the ratelimiter's cache.

|

|

|

|

|

|

|

|

|

|

Raises:

|

|

|

|

|

LimitExceededError: The requester can't send that many invites in the room.

|

|

|

|

|

"""

|

|

|

|

|

await self._invites_per_room_limiter.ratelimit(

|

|

|

|

|

requester,

|

|

|

|

|

room_id,

|

|

|

|

|

update=update,

|

|

|

|

|

n_actions=n_invites,

|

|

|

|

|

)

|

|

|

|

|

|

2021-03-30 07:06:09 -04:00

|

|

|

async def ratelimit_invite(

|

|

|

|

|

self,

|

|

|

|

|

requester: Optional[Requester],

|

|

|

|

|

room_id: Optional[str],

|

|

|

|

|

invitee_user_id: str,

|

2021-09-20 08:56:23 -04:00

|

|

|

) -> None:

|

2021-01-29 11:38:29 -05:00

|

|

|

"""Ratelimit invites by room and by target user.

|

2021-02-03 05:17:37 -05:00

|

|

|

|

|

|

|

|

If room ID is missing then we just rate limit by target user.

|

2021-01-29 11:38:29 -05:00

|

|

|

"""

|

2021-02-03 05:17:37 -05:00

|

|

|

if room_id:

|

2021-03-30 07:06:09 -04:00

|

|

|

await self._invites_per_room_limiter.ratelimit(requester, room_id)

|

2021-02-03 05:17:37 -05:00

|

|

|

|

2022-06-30 05:44:47 -04:00

|

|

|

await self._invites_per_recipient_limiter.ratelimit(requester, invitee_user_id)

|

|

|

|

|

if requester is not None:

|

|

|

|

|

await self._invites_per_issuer_limiter.ratelimit(requester)

|

2021-01-29 11:38:29 -05:00

|

|

|

|

2020-05-01 10:15:36 -04:00

|

|

|

async def _local_membership_update(

|

2016-04-01 11:17:32 -04:00

|

|

|

self,

|

2020-05-15 15:05:25 -04:00

|

|

|

requester: Requester,

|

|

|

|

|

target: UserID,

|

|

|

|

|

room_id: str,

|

|

|

|

|

membership: str,

|

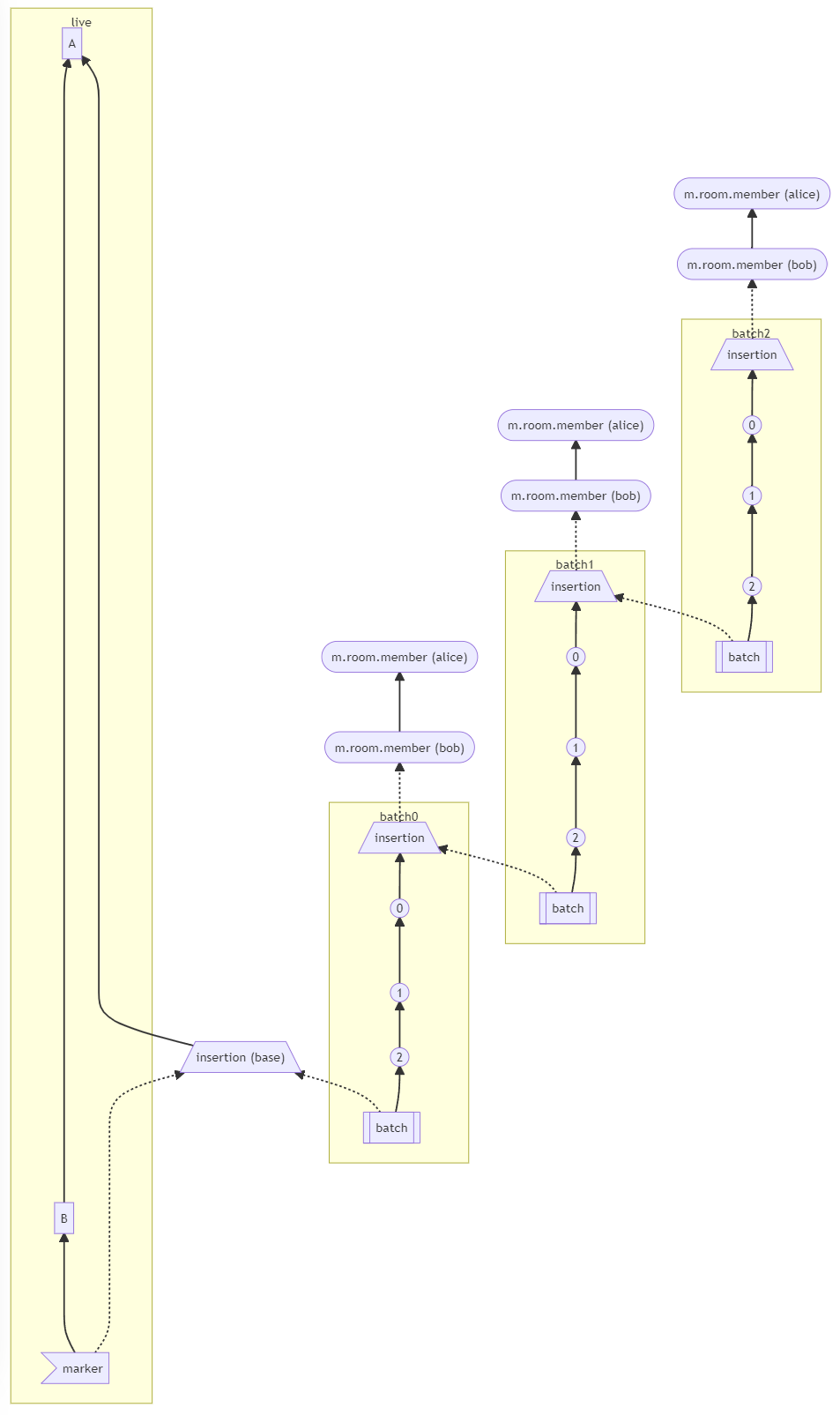

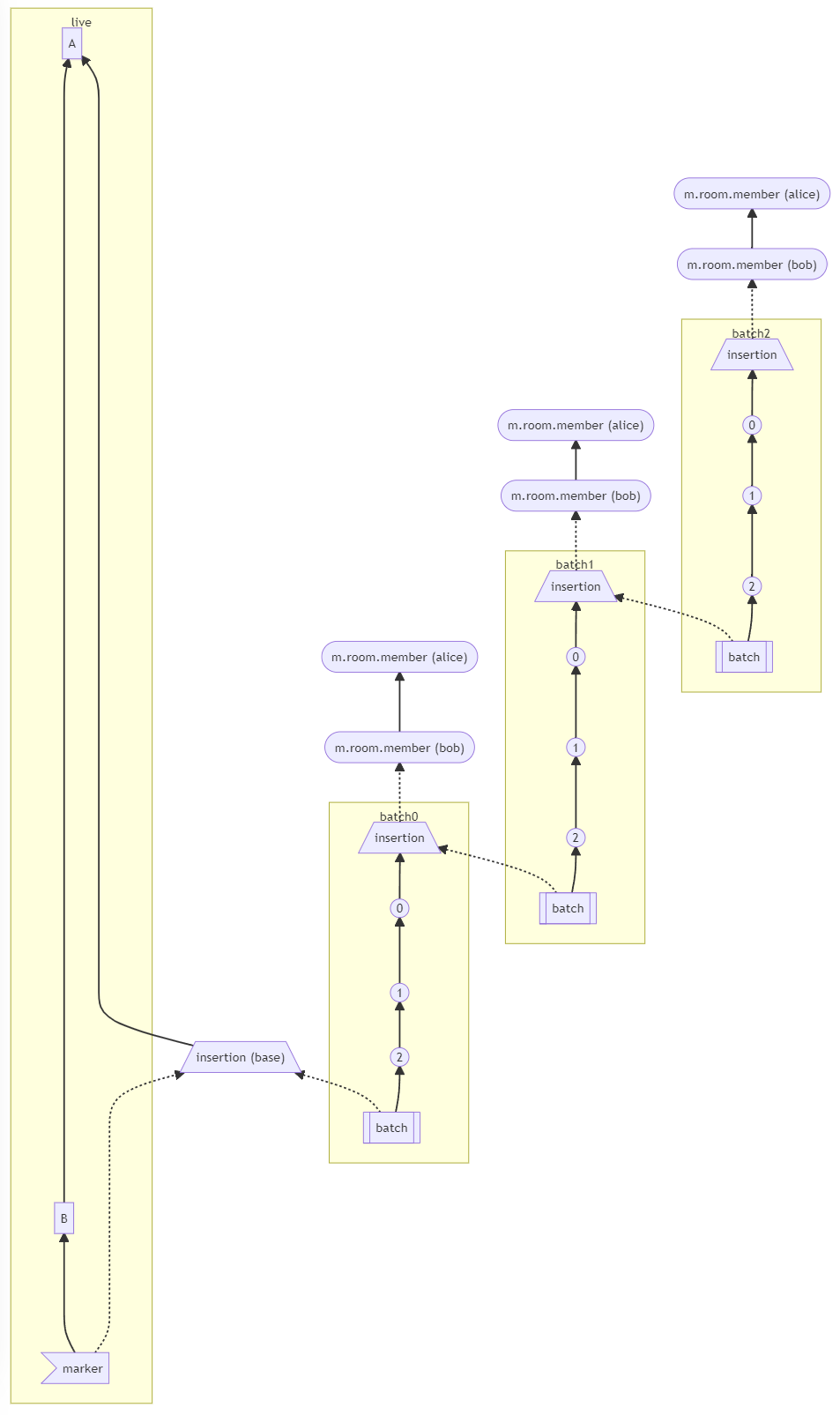

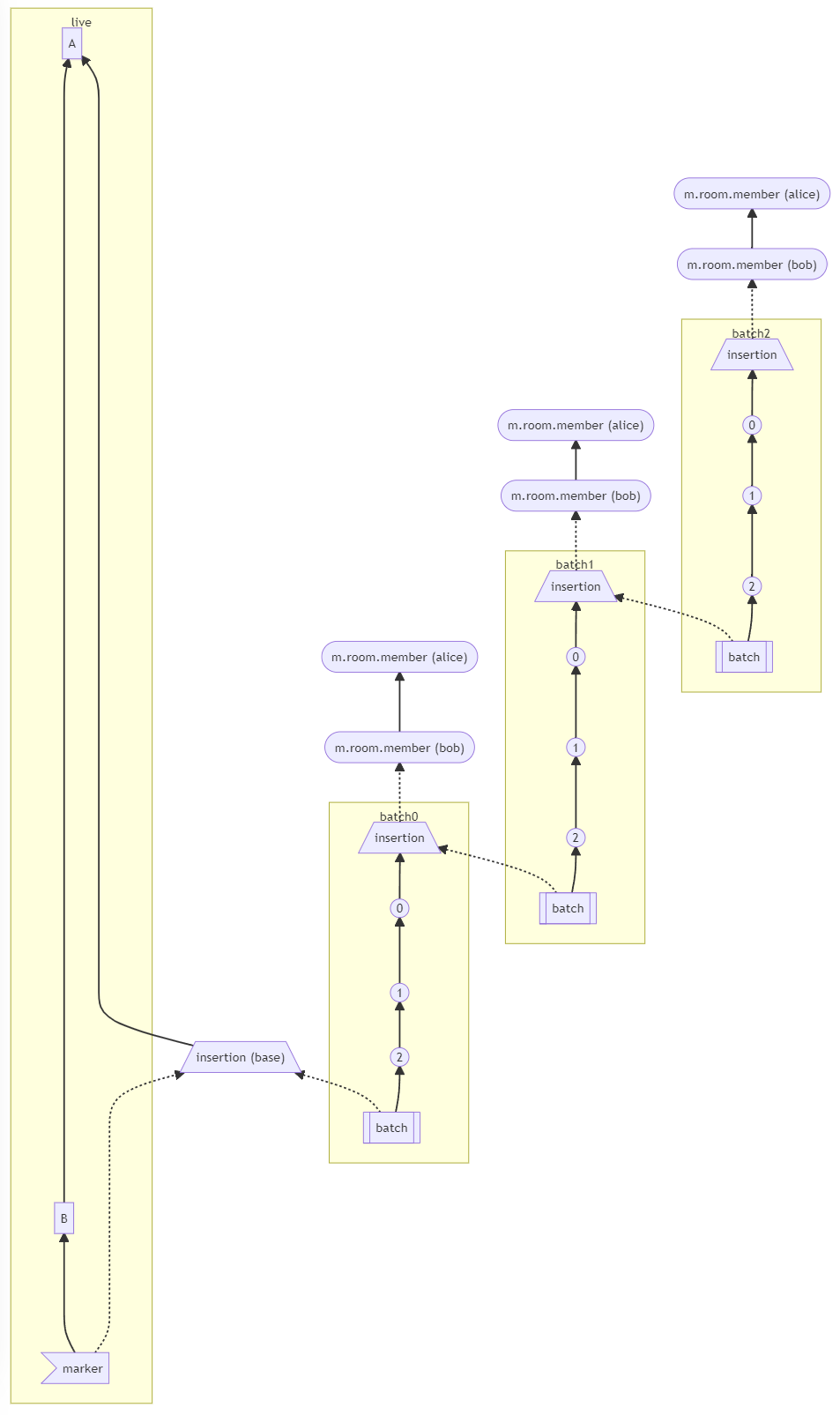

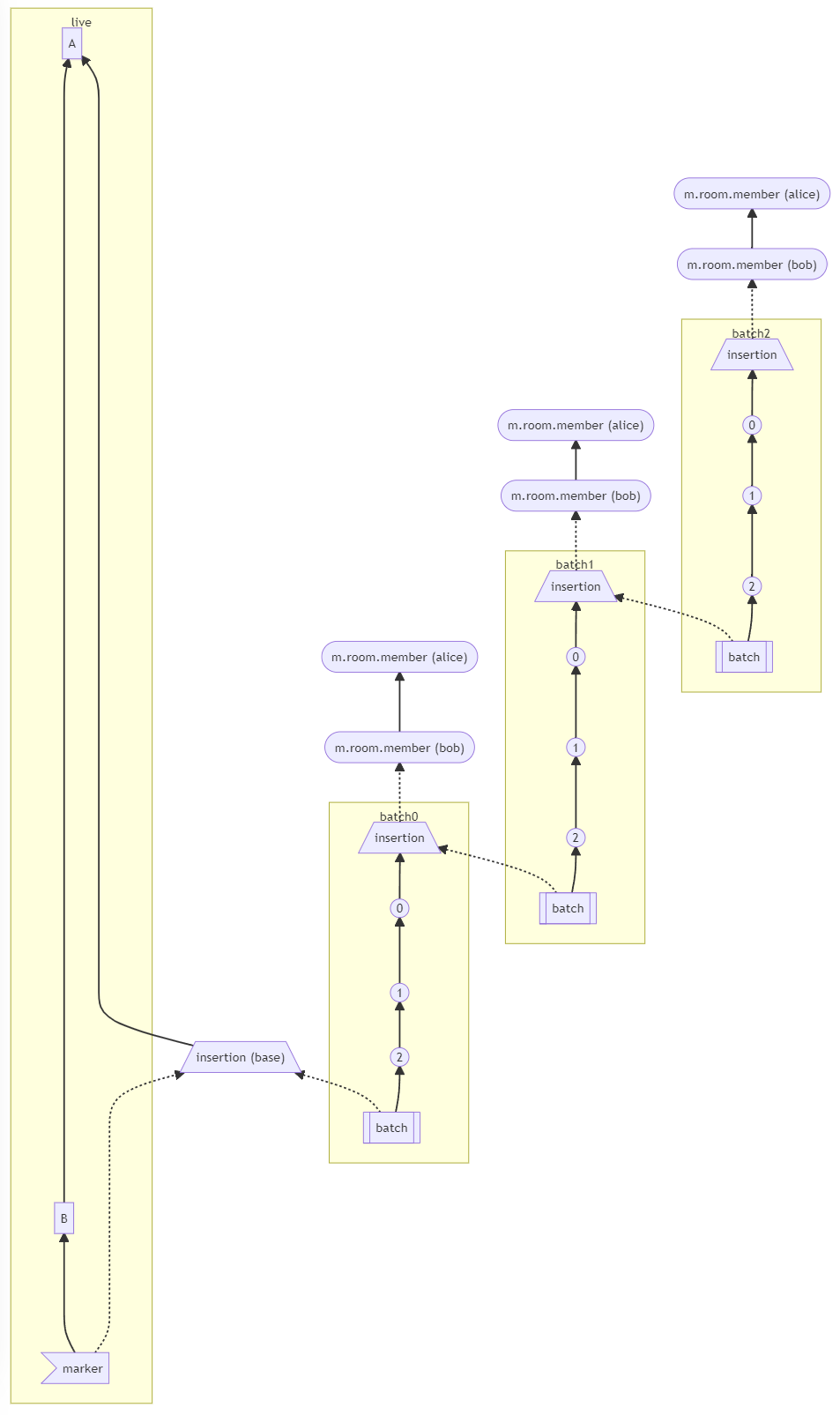

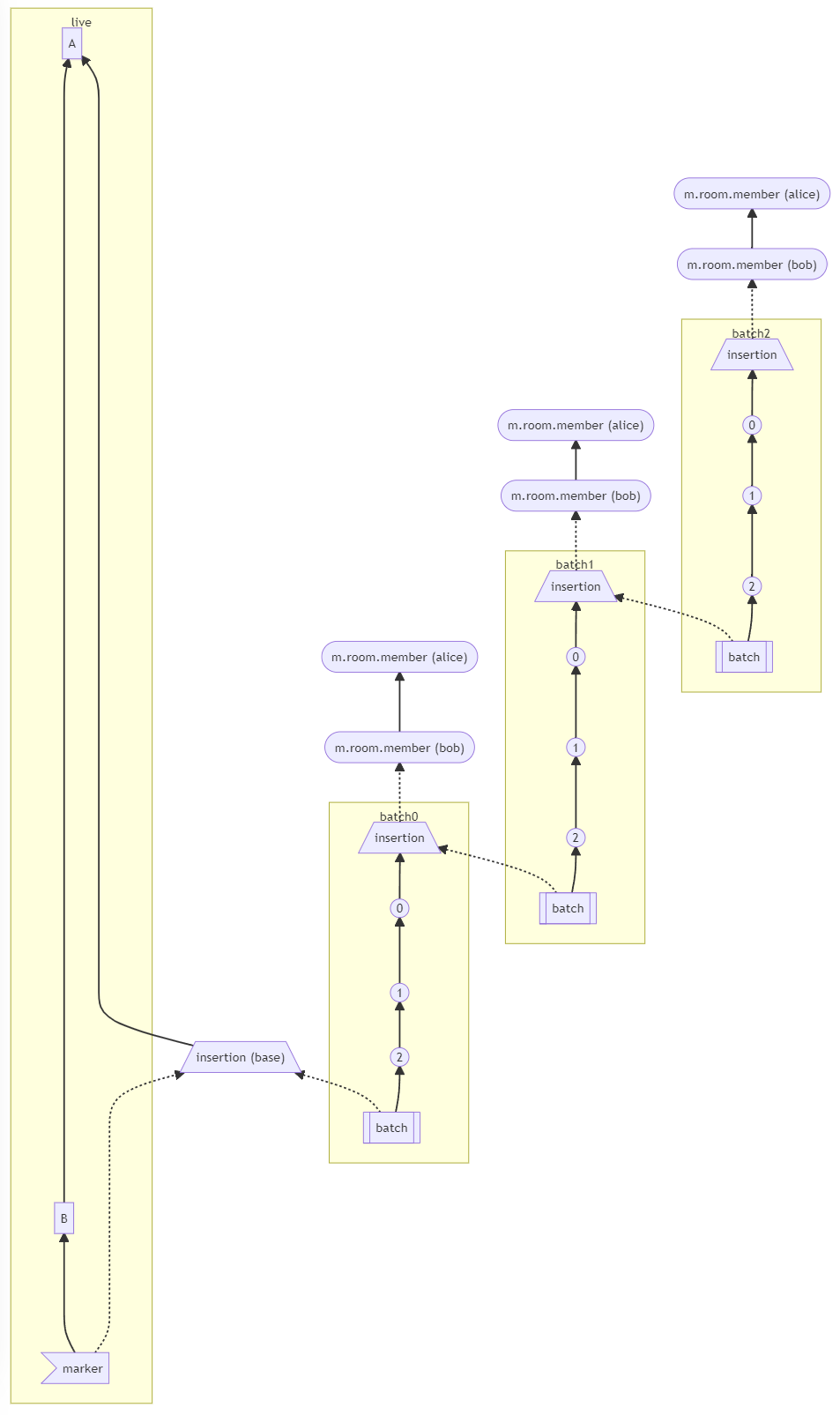

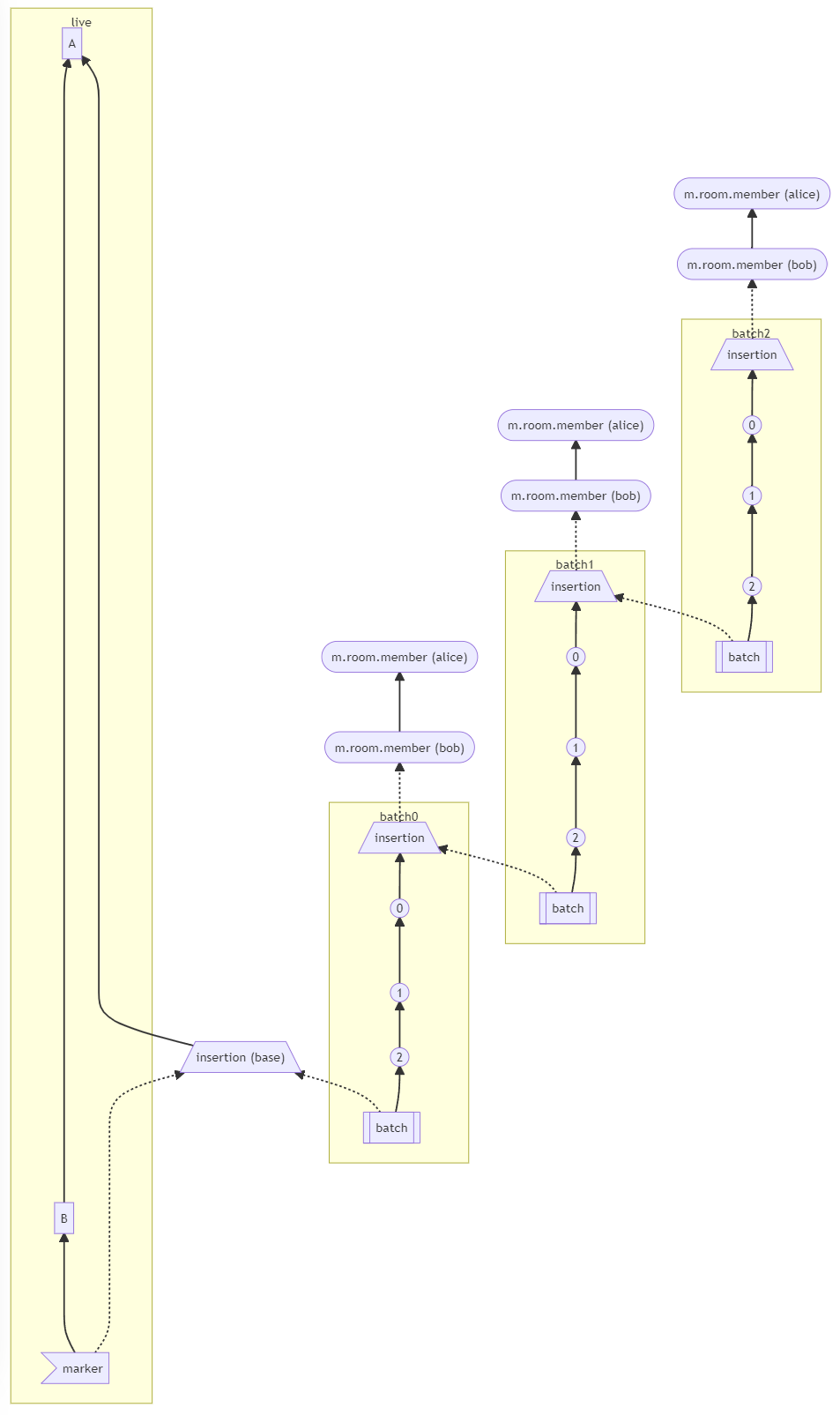

Fix historical messages backfilling in random order on remote homeservers (MSC2716) (#11114)

Fix https://github.com/matrix-org/synapse/issues/11091

Fix https://github.com/matrix-org/synapse/issues/10764 (side-stepping the issue because we no longer have to deal with `fake_prev_event_id`)

1. Made the `/backfill` response return messages in `(depth, stream_ordering)` order (previously only sorted by `depth`)

- Technically, it shouldn't really matter how `/backfill` returns things but I'm just trying to make the `stream_ordering` a little more consistent from the origin to the remote homeservers in order to get the order of messages from `/messages` consistent ([sorted by `(topological_ordering, stream_ordering)`](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)).

- Even now that we return backfilled messages in order, it still doesn't guarantee the same `stream_ordering` (and more importantly the [`/messages` order](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)) on the other server. For example, if a room has a bunch of history imported and someone visits a permalink to a historical message back in time, their homeserver will skip over the historical messages in between and insert the permalink as the next message in the `stream_order` and totally throw off the sort.

- This will be even more the case when we add the [MSC3030 jump to date API endpoint](https://github.com/matrix-org/matrix-doc/pull/3030) so the static archives can navigate and jump to a certain date.

- We're solving this in the future by switching to [online topological ordering](https://github.com/matrix-org/gomatrixserverlib/issues/187) and [chunking](https://github.com/matrix-org/synapse/issues/3785) which by its nature will apply retroactively to fix any inconsistencies introduced by people permalinking

2. As we're navigating `prev_events` to return in `/backfill`, we order by `depth` first (newest -> oldest) and now also tie-break based on the `stream_ordering` (newest -> oldest). This is technically important because MSC2716 inserts a bunch of historical messages at the same `depth` so it's best to be prescriptive about which ones we should process first. In reality, I think the code already looped over the historical messages as expected because the database is already in order.

3. Making the historical state chain and historical event chain float on their own by having no `prev_events` instead of a fake `prev_event` which caused backfill to get clogged with an unresolvable event. Fixes https://github.com/matrix-org/synapse/issues/11091 and https://github.com/matrix-org/synapse/issues/10764

4. We no longer find connected insertion events by finding a potential `prev_event` connection to the current event we're iterating over. We now solely rely on marker events which when processed, add the insertion event as an extremity and the federating homeserver can ask about it when time calls.

- Related discussion, https://github.com/matrix-org/synapse/pull/11114#discussion_r741514793


#### Why aren't we sorting topologically when receiving backfill events?

> The main reason we're going to opt to not sort topologically when receiving backfill events is because it's probably best to do whatever is easiest to make it just work. People will probably have opinions once they look at [MSC2716](https://github.com/matrix-org/matrix-doc/pull/2716) which could change whatever implementation anyway.

>

> As mentioned, ideally we would do this but code necessary to make the fake edges but it gets confusing and gives an impression of “just whyyyy” (feels icky). This problem also dissolves with online topological ordering.

>

> -- https://github.com/matrix-org/synapse/pull/11114#discussion_r741517138

See https://github.com/matrix-org/synapse/pull/11114#discussion_r739610091 for the technical difficulties
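
The ordering described in points 1 and 2 above can be illustrated with a small, self-contained sketch. This is not Synapse's implementation (which does this ordering against the database); `BackfillEvent` and `sort_for_backfill` are hypothetical names introduced only for illustration, and the sketch assumes each event carries an integer `depth` and `stream_ordering`.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BackfillEvent:
    """Hypothetical stand-in for an event row; not a Synapse class."""

    event_id: str
    depth: int
    stream_ordering: int


def sort_for_backfill(events: List[BackfillEvent]) -> List[BackfillEvent]:
    """Order events newest -> oldest by depth, tie-breaking on stream_ordering.

    Sorting on the (depth, stream_ordering) tuple in reverse gives a
    deterministic order even when many events share the same depth.
    """
    return sorted(
        events,
        key=lambda e: (e.depth, e.stream_ordering),
        reverse=True,
    )


events = [
    BackfillEvent("$a", depth=10, stream_ordering=5),
    BackfillEvent("$b", depth=10, stream_ordering=7),
    BackfillEvent("$c", depth=11, stream_ordering=1),
    BackfillEvent("$d", depth=9, stream_ordering=9),
]
ordered_ids = [e.event_id for e in sort_for_backfill(events)]
```

Here `$a` and `$b` share a depth, so the tie-break on `stream_ordering` decides which of the two comes first.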

2022-02-07 16:54:13 -05:00

|

|

|

allow_no_prev_events: bool = False,

|

|

|

|

|

prev_event_ids: Optional[List[str]] = None,

|

2022-03-25 10:21:06 -04:00

|

|

|

state_event_ids: Optional[List[str]] = None,

|

2022-07-13 14:32:46 -04:00

|

|

|

depth: Optional[int] = None,

|

2020-05-15 15:05:25 -04:00

|

|

|

txn_id: Optional[str] = None,

|

|

|

|

|

ratelimit: bool = True,

|

|

|

|

|

content: Optional[dict] = None,

|

|

|

|

|

require_consent: bool = True,

|

2021-06-22 05:02:53 -04:00

|

|

|

outlier: bool = False,

|

2022-10-03 09:30:45 -04:00

|

|

|

origin_server_ts: Optional[int] = None,

|

2020-05-22 09:21:54 -04:00

|

|

|

) -> Tuple[str, int]:

|

2021-06-22 05:02:53 -04:00

|

|

|

"""

|

|

|

|

|

Internal membership update function to get an existing event or create

|

|

|

|

|

and persist a new event for the new membership change.

|

|

|

|

|

|

|

|

|

|

Args:

|

2023-10-06 13:31:52 -04:00

|

|

|

requester: User requesting the membership change, i.e. the sender of the

|

|

|

|

|

desired membership event.

|

|

|

|

|

target: User whose membership should change, i.e. the state_key of the

|

|

|

|

|

desired membership event.

|

2021-06-22 05:02:53 -04:00

|

|

|

room_id:

|

|

|

|

|

membership:

|

|

|

|

|

|

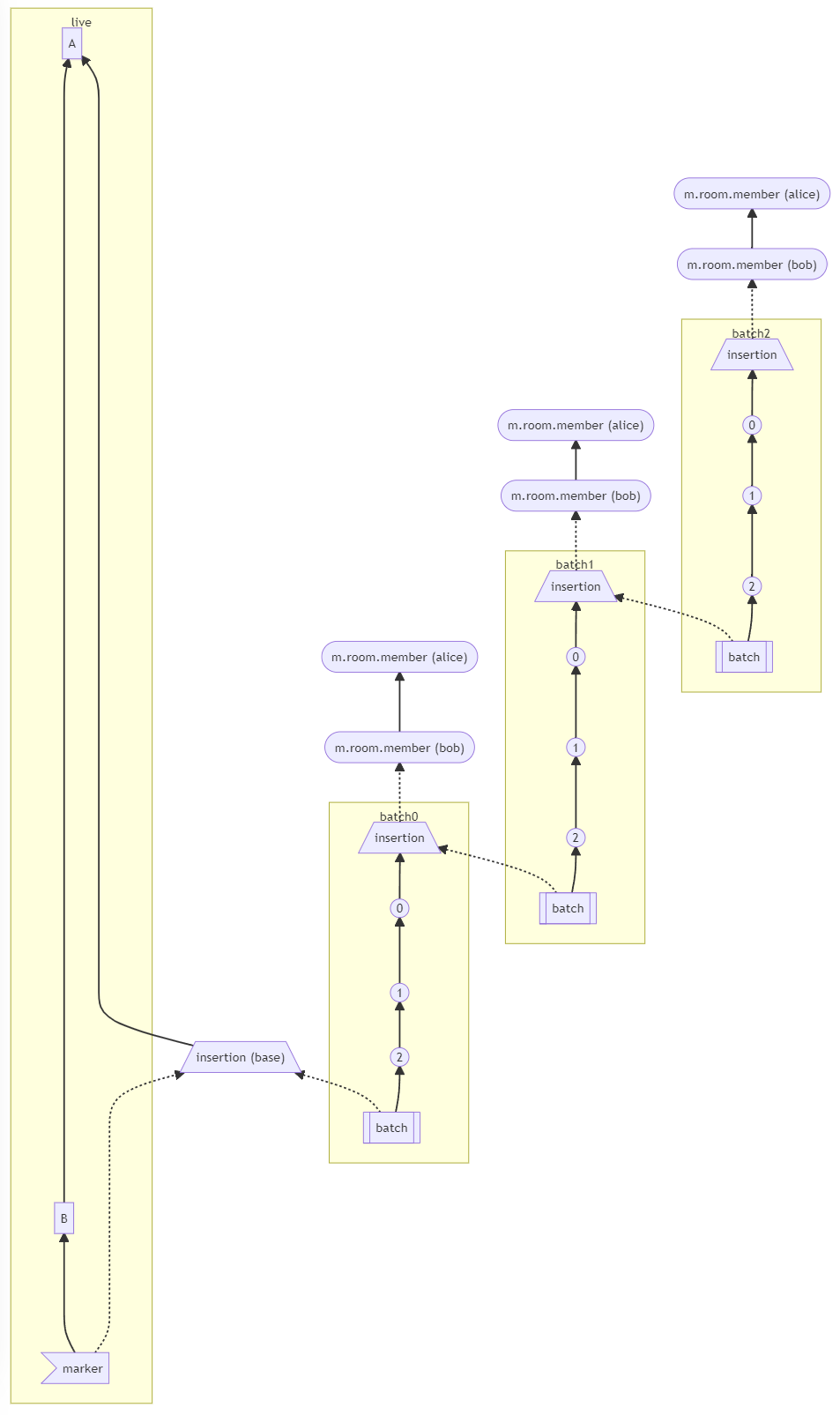

Fix historical messages backfilling in random order on remote homeservers (MSC2716) (#11114)

Fix https://github.com/matrix-org/synapse/issues/11091

Fix https://github.com/matrix-org/synapse/issues/10764 (side-stepping the issue because we no longer have to deal with `fake_prev_event_id`)

1. Made the `/backfill` response return messages in `(depth, stream_ordering)` order (previously only sorted by `depth`)

- Technically, it shouldn't really matter how `/backfill` returns things but I'm just trying to make the `stream_ordering` a little more consistent from the origin to the remote homeservers in order to get the order of messages from `/messages` consistent ([sorted by `(topological_ordering, stream_ordering)`](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)).

- Even now that we return backfilled messages in order, it still doesn't guarantee the same `stream_ordering` (and more importantly the [`/messages` order](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)) on the other server. For example, if a room has a bunch of history imported and someone visits a permalink to a historical message back in time, their homeserver will skip over the historical messages in between and insert the permalink as the next message in the `stream_order` and totally throw off the sort.

- This will be even more the case when we add the [MSC3030 jump to date API endpoint](https://github.com/matrix-org/matrix-doc/pull/3030) so the static archives can navigate and jump to a certain date.

- We're solving this in the future by switching to [online topological ordering](https://github.com/matrix-org/gomatrixserverlib/issues/187) and [chunking](https://github.com/matrix-org/synapse/issues/3785) which by its nature will apply retroactively to fix any inconsistencies introduced by people permalinking

2. As we're navigating `prev_events` to return in `/backfill`, we order by `depth` first (newest -> oldest) and now also tie-break based on the `stream_ordering` (newest -> oldest). This is technically important because MSC2716 inserts a bunch of historical messages at the same `depth` so it's best to be prescriptive about which ones we should process first. In reality, I think the code already looped over the historical messages as expected because the database is already in order.

3. Making the historical state chain and historical event chain float on their own by having no `prev_events` instead of a fake `prev_event` which caused backfill to get clogged with an unresolvable event. Fixes https://github.com/matrix-org/synapse/issues/11091 and https://github.com/matrix-org/synapse/issues/10764

4. We no longer find connected insertion events by finding a potential `prev_event` connection to the current event we're iterating over. We now solely rely on marker events which when processed, add the insertion event as an extremity and the federating homeserver can ask about it when time calls.

- Related discussion, https://github.com/matrix-org/synapse/pull/11114#discussion_r741514793

Before | After

--- | ---

|

#### Why aren't we sorting topologically when receiving backfill events?

> The main reason we're going to opt to not sort topologically when receiving backfill events is because it's probably best to do whatever is easiest to make it just work. People will probably have opinions once they look at [MSC2716](https://github.com/matrix-org/matrix-doc/pull/2716) which could change whatever implementation anyway.

>

> As mentioned, ideally we would do this but code necessary to make the fake edges but it gets confusing and gives an impression of “just whyyyy” (feels icky). This problem also dissolves with online topological ordering.

>

> -- https://github.com/matrix-org/synapse/pull/11114#discussion_r741517138

See https://github.com/matrix-org/synapse/pull/11114#discussion_r739610091 for the technical difficulties

2022-02-07 16:54:13 -05:00

|

|

|

allow_no_prev_events: Whether to allow this event to be created with an empty

|

|

|

|

|

list of prev_events. Normally this is prohibited just because most

|

|

|

|

|

events should have a prev_event and we should only use this in special

|

2023-06-16 15:12:24 -04:00

|

|

|

cases (previously useful for MSC2716).

|

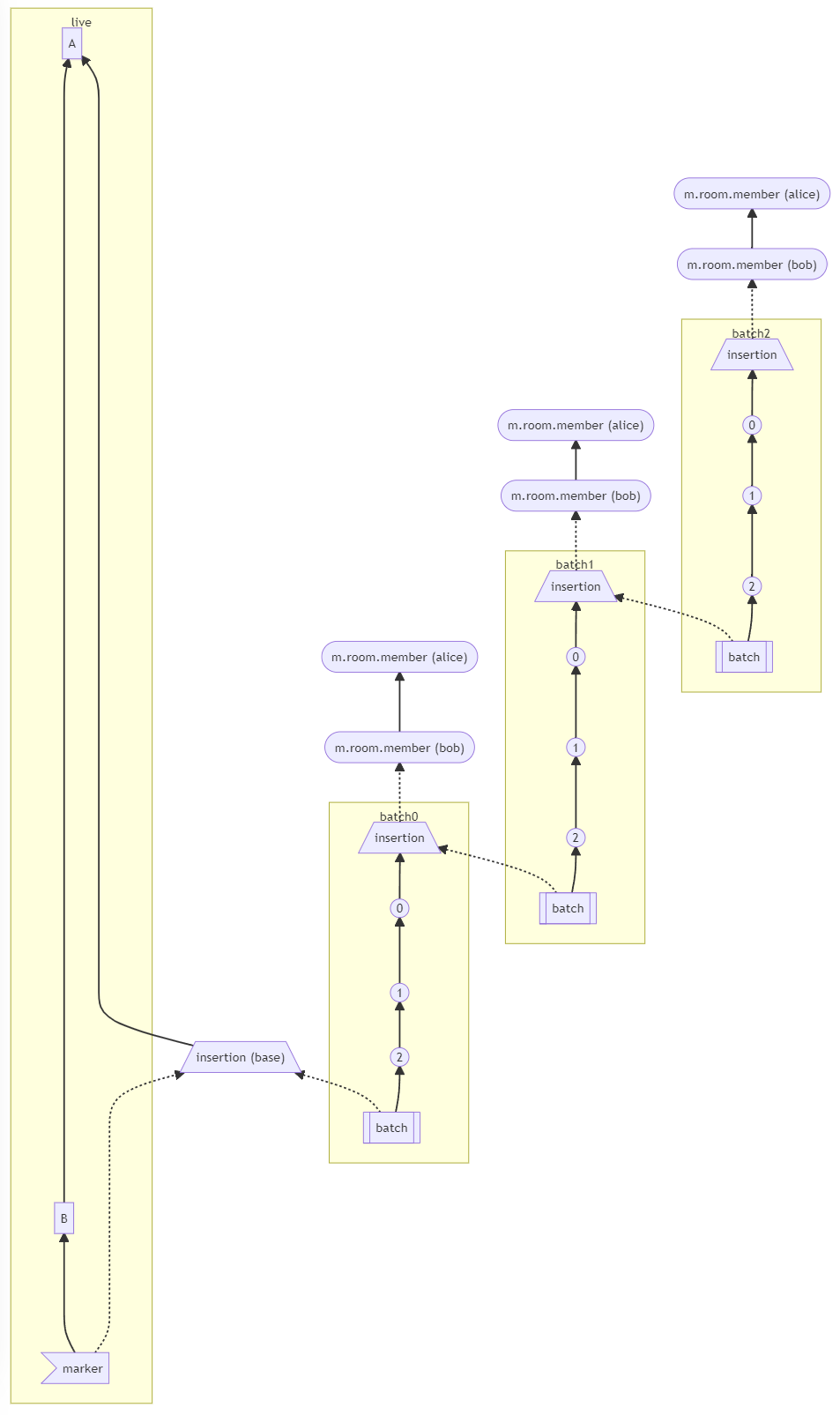

Fix historical messages backfilling in random order on remote homeservers (MSC2716) (#11114)

Fix https://github.com/matrix-org/synapse/issues/11091

Fix https://github.com/matrix-org/synapse/issues/10764 (side-stepping the issue because we no longer have to deal with `fake_prev_event_id`)

1. Made the `/backfill` response return messages in `(depth, stream_ordering)` order (previously only sorted by `depth`)

- Technically, it shouldn't really matter how `/backfill` returns things but I'm just trying to make the `stream_ordering` a little more consistent from the origin to the remote homeservers in order to get the order of messages from `/messages` consistent ([sorted by `(topological_ordering, stream_ordering)`](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)).

- Even now that we return backfilled messages in order, it still doesn't guarantee the same `stream_ordering` (and more importantly the [`/messages` order](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)) on the other server. For example, if a room has a bunch of history imported and someone visits a permalink to a historical message back in time, their homeserver will skip over the historical messages in between and insert the permalink as the next message in the `stream_order` and totally throw off the sort.

- This will be even more the case when we add the [MSC3030 jump to date API endpoint](https://github.com/matrix-org/matrix-doc/pull/3030) so the static archives can navigate and jump to a certain date.

- We're solving this in the future by switching to [online topological ordering](https://github.com/matrix-org/gomatrixserverlib/issues/187) and [chunking](https://github.com/matrix-org/synapse/issues/3785) which by its nature will apply retroactively to fix any inconsistencies introduced by people permalinking

2. As we're navigating `prev_events` to return in `/backfill`, we order by `depth` first (newest -> oldest) and now also tie-break based on the `stream_ordering` (newest -> oldest). This is technically important because MSC2716 inserts a bunch of historical messages at the same `depth` so it's best to be prescriptive about which ones we should process first. In reality, I think the code already looped over the historical messages as expected because the database is already in order.

3. Making the historical state chain and historical event chain float on their own by having no `prev_events` instead of a fake `prev_event` which caused backfill to get clogged with an unresolvable event. Fixes https://github.com/matrix-org/synapse/issues/11091 and https://github.com/matrix-org/synapse/issues/10764

4. We no longer find connected insertion events by finding a potential `prev_event` connection to the current event we're iterating over. We now solely rely on marker events which when processed, add the insertion event as an extremity and the federating homeserver can ask about it when time calls.

- Related discussion, https://github.com/matrix-org/synapse/pull/11114#discussion_r741514793

Before | After

--- | ---

|

#### Why aren't we sorting topologically when receiving backfill events?

> The main reason we're going to opt to not sort topologically when receiving backfill events is because it's probably best to do whatever is easiest to make it just work. People will probably have opinions once they look at [MSC2716](https://github.com/matrix-org/matrix-doc/pull/2716) which could change whatever implementation anyway.

>

> As mentioned, ideally we would do this but code necessary to make the fake edges but it gets confusing and gives an impression of “just whyyyy” (feels icky). This problem also dissolves with online topological ordering.

>

> -- https://github.com/matrix-org/synapse/pull/11114#discussion_r741517138

See https://github.com/matrix-org/synapse/pull/11114#discussion_r739610091 for the technical difficulties
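The `(depth, stream_ordering)` tie-break described in the commit message above can be illustrated with a minimal sketch. It is not Synapse's actual query (which runs against the database); `BackfillCandidate` and `order_for_backfill` are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BackfillCandidate:
    """Hypothetical stand-in for an event row; not a Synapse type."""

    event_id: str
    depth: int
    stream_ordering: int


def order_for_backfill(events: List[BackfillCandidate]) -> List[BackfillCandidate]:
    # Newest -> oldest by depth, then newest -> oldest by stream_ordering,
    # so that events sharing a depth are processed in a fixed order.
    return sorted(events, key=lambda e: (e.depth, e.stream_ordering), reverse=True)


events = [
    BackfillCandidate("$a", depth=10, stream_ordering=3),
    BackfillCandidate("$b", depth=10, stream_ordering=7),
    BackfillCandidate("$c", depth=11, stream_ordering=1),
]
ordered_ids = [e.event_id for e in order_for_backfill(events)]
```

Here `$c` comes first because it has the greatest depth, and `$b` precedes `$a` because the two share a depth and `$b` has the larger `stream_ordering`.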

2022-02-07 16:54:13 -05:00

|

|

|

prev_event_ids: The event IDs to use as the prev events

|

2022-03-25 10:21:06 -04:00

|

|

|

state_event_ids:

|

2023-06-16 15:12:24 -04:00

|

|

|

The full state at a given event. This was previously used particularly

|

|

|

|

|

by the MSC2716 /batch_send endpoint. This should normally be left as

|

|

|

|

|

None, which will cause the auth_event_ids to be calculated based on the

|

|

|

|

|

room state at the prev_events.

|

2022-07-13 14:32:46 -04:00

|

|

|

depth: Override the depth used to order the event in the DAG.

|

|

|

|

|

Should normally be set to None, which will cause the depth to be calculated

|

|

|

|

|

based on the prev_events.

|

2021-06-22 05:02:53 -04:00

|

|

|

|

|

|

|

|

txn_id:

|

|

|

|

|

ratelimit:

|

|

|

|

|

content:

|

|

|

|

|

require_consent:

|

|

|

|

|

|

|

|

|

|

outlier: Indicates whether the event is an `outlier`, i.e. if

|

|

|

|

|

it's from an arbitrary point and floating in the DAG as

|

|

|

|

|

opposed to being inline with the current DAG.

|

2022-10-03 09:30:45 -04:00

|

|

|

origin_server_ts: The origin_server_ts to use if a new event is created. Uses

|

|

|

|

|

the current timestamp if set to None.

|

2021-06-22 05:02:53 -04:00

|

|

|

|

|

|

|

|

Returns:

|

|

|

|

|

Tuple of event ID and stream ordering position

|

|

|

|

|

"""

|

2019-01-18 12:03:09 -05:00

|

|

|

user_id = target.to_string()

|

|

|

|

|

|

2016-08-23 11:32:04 -04:00

|

|

|

if content is None:

|

|

|

|

|

content = {}

|

2016-04-01 11:17:32 -04:00

|

|

|

|

2016-08-23 11:32:04 -04:00

|

|

|

content["membership"] = membership

|

2016-04-01 11:17:32 -04:00

|

|

|

if requester.is_guest:

|

|

|

|

|

content["kind"] = "guest"

|

|

|

|

|

|

2020-10-13 07:07:56 -04:00

|

|

|

# Check if we already have an event with a matching transaction ID. (We

|

|

|

|

|

# do this check just before we persist an event as well, but may as well

|

|

|

|

|

# do it up front for efficiency.)

|

2023-04-25 04:37:09 -04:00

|

|

|

if txn_id:

|

2023-07-31 08:44:45 -04:00

|

|

|

existing_event_id = (

|

|

|

|

|

await self.event_creation_handler.get_event_id_from_transaction(

|

|

|

|

|

requester, txn_id, room_id

|

2023-04-25 04:37:09 -04:00

|

|

|

)

|

2023-07-31 08:44:45 -04:00

|

|

|

)

|

2020-10-13 07:07:56 -04:00

|

|

|

if existing_event_id:

|

|

|

|

|

event_pos = await self.store.get_position_for_event(existing_event_id)

|

|

|

|

|

return existing_event_id, event_pos.stream

|

|

|

|

|

|

2022-12-15 11:04:23 -05:00

|

|

|

# Try several times, it could fail with PartialStateConflictError,

|

|

|

|

|

# in handle_new_client_event, cf comment in except block.

|

|

|

|

|

max_retries = 5

|

|

|

|

|

for i in range(max_retries):

|

|

|

|

|

try:

|

2023-02-24 16:15:29 -05:00

|

|

|

(

|

|

|

|

|

event,

|

|

|

|

|

unpersisted_context,

|

|

|

|

|

) = await self.event_creation_handler.create_event(

|

2022-12-15 11:04:23 -05:00

|

|

|

requester,

|

|

|

|

|

{

|

|

|

|

|

"type": EventTypes.Member,

|

|

|

|

|

"content": content,

|

|

|

|

|

"room_id": room_id,

|

|

|

|

|

"sender": requester.user.to_string(),

|

|

|

|

|

"state_key": user_id,

|

|

|

|

|

# For backwards compatibility:

|

|

|

|

|

"membership": membership,

|

|

|

|

|

"origin_server_ts": origin_server_ts,

|

|

|

|

|

},

|

|

|

|

|

txn_id=txn_id,

|

|

|

|

|

allow_no_prev_events=allow_no_prev_events,

|

|

|

|

|

prev_event_ids=prev_event_ids,

|

|

|

|

|

state_event_ids=state_event_ids,

|

|

|

|

|

depth=depth,

|

|

|

|

|

require_consent=require_consent,

|

|

|

|

|

outlier=outlier,

|

|

|

|

|

)

|

2023-02-24 16:15:29 -05:00

|

|

|

context = await unpersisted_context.persist(event)

|

2022-12-15 11:04:23 -05:00

|

|

|

prev_state_ids = await context.get_prev_state_ids(

|

2023-07-20 05:46:37 -04:00

|

|

|

StateFilter.from_types([(EventTypes.Member, user_id)])

|

2022-12-15 11:04:23 -05:00

|

|

|

)

|

2016-04-01 11:17:32 -04:00

|

|

|

|

2022-12-15 11:04:23 -05:00

|

|

|

prev_member_event_id = prev_state_ids.get(

|

|

|

|

|

(EventTypes.Member, user_id), None

|

2022-07-19 07:45:17 -04:00

|

|

|

)

|

2020-08-24 13:06:04 -04:00

|

|

|

|

2022-12-15 11:04:23 -05:00

|

|

|

with opentracing.start_active_span("handle_new_client_event"):

|

|

|

|

|

result_event = (

|

|

|

|

|

await self.event_creation_handler.handle_new_client_event(

|

|

|

|

|

requester,

|

|

|

|

|

events_and_context=[(event, context)],

|

|

|

|

|

extra_users=[target],

|

|

|

|

|

ratelimit=ratelimit,

|

|

|

|

|

)

|

|

|

|

|

)

|

|

|

|

|

|

|

|

|

|

if event.membership == Membership.LEAVE:

|

|

|

|

|

if prev_member_event_id:

|

|

|

|

|

prev_member_event = await self.store.get_event(

|

|

|

|

|

prev_member_event_id

|

|

|

|

|

)

|

|

|

|

|

if prev_member_event.membership == Membership.JOIN:

|

|

|

|

|

await self._user_left_room(target, room_id)

|

|

|

|

|

|

|

|

|

|

break

|

|

|

|

|

except PartialStateConflictError as e:

|

|

|

|

|

# Persisting couldn't happen because the room got un-partial stated

|

|

|

|

|

# in the meantime and context needs to be recomputed, so let's do so.

|

|

|

|

|

if i == max_retries - 1:

|

|

|

|

|

raise e

|

2016-04-01 11:17:32 -04:00

|

|

|

|

2020-10-02 11:45:41 -04:00

|

|

|

# we know it was persisted, so should have a stream ordering

|

|

|

|

|

assert result_event.internal_metadata.stream_ordering

|

|

|

|

|

return result_event.event_id, result_event.internal_metadata.stream_ordering
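The bounded retry loop used above (retry on `PartialStateConflictError`, re-raise on the final attempt) can be sketched in isolation. This is a simplified illustration under stated assumptions: `ConflictError` and `retry_on_conflict` are hypothetical names, and the real code recomputes the event context on each attempt rather than calling a single callable.

```python
import asyncio
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


class ConflictError(Exception):
    """Hypothetical stand-in for PartialStateConflictError."""


async def retry_on_conflict(
    attempt: Callable[[], Awaitable[T]], max_retries: int = 5
) -> T:
    if max_retries < 1:
        raise ValueError("max_retries must be at least 1")
    for i in range(max_retries):
        try:
            # Each attempt rebuilds whatever state it needs from scratch.
            return await attempt()
        except ConflictError:
            # Give up only once the final attempt has failed.
            if i == max_retries - 1:
                raise
    raise AssertionError("unreachable")


counter = {"calls": 0}


async def flaky() -> str:
    # Fails twice with a conflict, then succeeds on the third call.
    counter["calls"] += 1
    if counter["calls"] < 3:
        raise ConflictError()
    return "persisted"


result = asyncio.run(retry_on_conflict(flaky))
```

Any exception other than the conflict type propagates immediately, which matches the shape of the `try`/`except` in the function above.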

|

2017-01-09 13:25:13 -05:00

|

|

|

|

2020-05-15 09:32:13 -04:00

|

|

|

async def copy_room_tags_and_direct_to_room(

|

2021-09-20 08:56:23 -04:00

|

|

|

self, old_room_id: str, new_room_id: str, user_id: str

|

2020-05-15 15:05:25 -04:00

|

|

|

) -> None:

|

2019-01-25 06:09:34 -05:00

|

|

|

"""Copies the tags and direct room state from one room to another.

|

|

|

|

|

|

|

|

|

|

Args:

|

2020-05-15 15:05:25 -04:00

|

|

|

old_room_id: The room ID of the old room.

|

|

|

|

|

new_room_id: The room ID of the new room.

|

|

|

|

|

user_id: The user's ID.

|

2019-01-25 06:09:34 -05:00

|

|

|

"""

|

2019-01-25 06:48:38 -05:00

|

|

|

# Retrieve user account data for predecessor room

|

2023-02-10 09:22:16 -05:00

|

|

|

user_account_data = await self.store.get_global_account_data_for_user(user_id)

|

2019-01-25 06:09:34 -05:00

|

|

|

|

2019-01-25 06:48:38 -05:00

|

|

|

# Copy direct message state if applicable

|

2020-10-05 09:28:05 -04:00

|

|

|

direct_rooms = user_account_data.get(AccountDataTypes.DIRECT, {})

|

2019-01-25 06:48:38 -05:00

|

|

|

|

|

|

|

|

# Check which key this room is under

|

|

|

|

|

if isinstance(direct_rooms, dict):

|

|

|

|

|

for key, room_id_list in direct_rooms.items():

|

|

|

|

|

if old_room_id in room_id_list and new_room_id not in room_id_list:

|

|

|

|

|

# Add new room_id to this key

|

|

|

|

|

direct_rooms[key].append(new_room_id)

|

|

|

|

|

|

|

|

|

|

# Save back to user's m.direct account data

|

2021-01-18 10:47:59 -05:00

|

|

|

await self.account_data_handler.add_account_data_for_user(

|

2020-10-05 09:28:05 -04:00

|

|

|

user_id, AccountDataTypes.DIRECT, direct_rooms

|

2019-01-25 06:48:38 -05:00

|

|

|

)

|

|

|

|

|

break

|

|

|

|

|

|

|

|

|

|

# Copy room tags if applicable

|

2020-05-15 09:32:13 -04:00

|

|

|

room_tags = await self.store.get_tags_for_room(user_id, old_room_id)

|

2019-01-25 06:09:34 -05:00

|

|

|

|

2019-01-25 06:48:38 -05:00

|

|

|

# Copy each room tag to the new room

|

|

|

|

|

for tag, tag_content in room_tags.items():

|

2021-01-18 10:47:59 -05:00

|

|

|

await self.account_data_handler.add_tag_to_room(

|

|

|

|

|

user_id, new_room_id, tag, tag_content

|

|

|

|

|

)
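The `m.direct` handling in `copy_room_tags_and_direct_to_room` can be sketched as a pure function over the account-data dict. This is an illustrative sketch only: `add_successor_to_direct` is a hypothetical helper, and it leaves out the store read and the `add_account_data_for_user` write that the real method performs.

```python
from typing import Any


def add_successor_to_direct(
    direct_rooms: Any, old_room_id: str, new_room_id: str
) -> bool:
    """Append new_room_id next to old_room_id; return True if anything changed."""
    # Account data is client-controlled, so it may not be a dict at all.
    if not isinstance(direct_rooms, dict):
        return False
    for room_id_list in direct_rooms.values():
        if old_room_id in room_id_list and new_room_id not in room_id_list:
            room_id_list.append(new_room_id)
            # Like the method above, stop after the first matching key.
            return True
    return False


direct = {
    "@alice:example.org": ["!old:example.org"],
    "@bob:example.org": ["!other:example.org"],
}
changed = add_successor_to_direct(direct, "!old:example.org", "!new:example.org")
```

A second call with the same arguments returns `False`, because the new room ID is already listed under the matching key.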

|

2019-01-25 06:21:25 -05:00

|

|

|

|

2020-05-01 10:15:36 -04:00

|

|

|

async def update_membership(

|

2016-03-31 08:08:45 -04:00

|

|

|

self,

|

2020-05-15 15:05:25 -04:00

|

|

|

requester: Requester,

|

|

|

|

|

target: UserID,

|

|

|

|

|

room_id: str,

|

|

|

|

|

action: str,

|

|

|

|

|

txn_id: Optional[str] = None,

|

|

|

|

|

remote_room_hosts: Optional[List[str]] = None,

|

|

|

|

|

third_party_signed: Optional[dict] = None,

|

|

|

|

|

ratelimit: bool = True,

|

|

|

|

|

content: Optional[dict] = None,

|

2021-10-06 10:32:16 -04:00

|

|

|

new_room: bool = False,

|

2020-05-15 15:05:25 -04:00

|

|

|

require_consent: bool = True,

|

2021-06-22 05:02:53 -04:00

|

|

|

outlier: bool = False,

|

2022-02-07 16:54:13 -05:00

|

|

|

allow_no_prev_events: bool = False,

|

2021-06-22 05:02:53 -04:00

|

|

|

prev_event_ids: Optional[List[str]] = None,

|

2022-03-25 10:21:06 -04:00

|

|

|

state_event_ids: Optional[List[str]] = None,

|

2022-07-13 14:32:46 -04:00

|

|

|

depth: Optional[int] = None,

|

2022-10-03 09:30:45 -04:00

|

|

|

origin_server_ts: Optional[int] = None,

|

2020-07-09 08:01:42 -04:00

|

|

|

) -> Tuple[str, int]:

|

2020-08-20 15:07:42 -04:00

|

|

|

"""Update a user's membership in a room.

|

|

|

|

|

|

|

|

|

|

Params:

|

|

|

|

|

requester: The user who is performing the update.

|

|

|

|

|

target: The user whose membership is being updated.

|

|

|

|

|

room_id: The room ID whose membership is being updated.

|

|

|

|

|

action: The membership change, see synapse.api.constants.Membership.

|

|

|

|

|

txn_id: The transaction ID, if given.

|

|

|

|

|

remote_room_hosts: Remote servers to send the update to.

|

|

|

|

|

third_party_signed: Information from a 3PID invite.

|

|

|

|

|

ratelimit: Whether to rate limit the request.

|

|

|

|

|

content: The content of the created event.

|

2021-10-06 10:32:16 -04:00

|

|

|

new_room: Whether the membership update is happening in the context of a room

|

|

|

|

|

creation.

|

2020-08-20 15:07:42 -04:00

|

|

|

require_consent: Whether consent is required.

|

2021-06-22 05:02:53 -04:00

|

|

|

outlier: Indicates whether the event is an `outlier`, i.e. if

|

|

|

|

|

it's from an arbitrary point and floating in the DAG as

|

|

|

|

|

opposed to being inline with the current DAG.

|

2022-02-07 16:54:13 -05:00

|

|

|

allow_no_prev_events: Whether to allow this event to be created with an empty

|

|

|

|

|

list of prev_events. Normally this is prohibited just because most

|

|

|

|

|

events should have a prev_event and we should only use this in special

|

2023-06-16 15:12:24 -04:00

|

|

|

cases (previously useful for MSC2716).

|

2021-06-22 05:02:53 -04:00

|

|

|

prev_event_ids: The event IDs to use as the prev events

|

2022-03-25 10:21:06 -04:00

|

|

|

state_event_ids:

|

2023-06-16 15:12:24 -04:00

|

|

|

The full state at a given event. This was previously used particularly

|

|

|

|

|

by the MSC2716 /batch_send endpoint. This should normally be left as

|

|

|

|

|

None, which will cause the auth_event_ids to be calculated based on the

|

|

|

|

|

room state at the prev_events.

|

2022-07-13 14:32:46 -04:00

|

|

|

depth: Override the depth used to order the event in the DAG.

|

|

|

|

|

Should normally be set to None, which will cause the depth to be calculated

|

|

|

|

|

based on the prev_events.

|

2022-10-03 09:30:45 -04:00

|

|

|

origin_server_ts: The origin_server_ts to use if a new event is created. Uses

|

|

|

|

|

the current timestamp if set to None.

|

2020-08-20 15:07:42 -04:00

|

|

|

|

|

|

|

|

Returns:

|

|

|

|

|

A tuple of the new event ID and stream ID.

|

|

|

|

|

|

|

|

|

|

Raises:

|

|

|

|

|

ShadowBanError if a shadow-banned requester attempts to send an invite.

|

|

|

|

|

"""

|

2023-10-06 13:31:52 -04:00

|

|

|

if ratelimit:

|

|

|

|

|

if action == Membership.JOIN:

|

|

|

|

|

# Only rate-limit if the user isn't already joined to the room, otherwise

|

|

|

|

|

# we'll end up blocking profile updates.

|

|

|

|

|

(

|

|

|

|

|

current_membership,

|

|

|

|

|

_,

|

|

|

|

|

) = await self.store.get_local_current_membership_for_user_in_room(

|

|

|

|

|

requester.user.to_string(),

|

|

|

|

|

room_id,

|

|

|

|

|

)

|

|

|

|

|

if current_membership != Membership.JOIN:

|

|

|

|

|

await self._join_rate_limiter_local.ratelimit(requester)

|

|

|

|

|

await self._join_rate_per_room_limiter.ratelimit(

|

|

|

|

|

requester, key=room_id, update=False

|

|

|

|

|

)

|

|

|

|

|

elif action == Membership.INVITE:

|

|

|

|

|

await self.ratelimit_invite(requester, room_id, target.to_string())

|

|

|

|

|

|

2020-08-20 15:07:42 -04:00

|

|

|

if action == Membership.INVITE and requester.shadow_banned:

|

|

|

|

|

# We randomly sleep a bit just to annoy the requester.

|

|

|

|

|

await self.clock.sleep(random.randint(1, 10))

|

|

|

|

|

raise ShadowBanError()

|

|

|

|

|

|

2016-08-12 04:32:19 -04:00

|

|

|

key = (room_id,)

|

2016-04-06 10:44:22 -04:00

|

|

|

|

2022-02-16 06:16:48 -05:00

|

|

|

as_id = object()

|

|

|

|

|

if requester.app_service:

|

|

|

|

|

as_id = requester.app_service.id

|

|

|

|

|

|

|

|

|

|

# We first linearise by the application service (to try to limit concurrent joins

|

|

|

|

|

# by application services), and then by room ID.

|

2022-04-05 10:43:52 -04:00

|

|

|

async with self.member_as_limiter.queue(as_id):

|

|

|

|

|

async with self.member_linearizer.queue(key):

|

2023-07-31 05:58:03 -04:00

|

|

|

async with self._worker_lock_handler.acquire_read_write_lock(

|

2023-08-16 10:19:54 -04:00

|

|

|

NEW_EVENT_DURING_PURGE_LOCK_NAME, room_id, write=False

|

2023-07-31 05:58:03 -04:00

|

|

|

):

|

|

|

|

|

with opentracing.start_active_span("update_membership_locked"):

|

|

|

|

|

result = await self.update_membership_locked(

|

|

|

|

|

requester,

|

|

|

|

|

target,

|

|

|

|

|

room_id,

|

|

|

|

|